Asymptotic notation

Big-Θ(Big-Theta) notation

Logarithms grow more slowly than polynomials. That is, Θ(log2n) grows more slowly than Θ(na) for any positive constant a. But since the value of log2n, increases as n increases, Θ(log2n) grows faster than Θ(1).

The following graph compares the growth of 1, n, and log2n:

Here's a list of functions in asymptotic notation that we often encounter when analyzing algorithms, ordered by slowest to fastest growing:

Θ(1)

Θ(log2n)

Θ(n)

Θ(nlog2n)

Θ(n2)

Θ(n2log2n)

Θ(n3)

Θ(2n)

Θ(n!)

Note that an exponential function a^nana, start superscript, n>1, grows faster than any polynomial function nb, where b is any constant.

A function has "constant" growth if its output does not change based on the input, the n. The easy way to identify constant functions is find those that have no n in their expression anywhere, or have n0, In this case, 1 and 1000 are constant.

A function has "linear" growth if its output increases linearly with the size of its input. The way to identify linear functions is find those where n is never raised to a power (although n1is OK) or used as a power. In this case, 3n and (3/2)n are linear.

A function has "polynomial" growth if its output increases according to a polynomial expression. The way to identify polynomial functions is to find those where n is raised to some constant power. In this case, 2n3 and 3n2 are polynomial.

A function has "exponential" growth if its output increases according to an exponential expression. The way to identify exponential functions is to find those where a constant is raised to some expression involving n. In this case, 2n and (3/2)n are exponential.

We have several different types of functions here, so we start by thinking about the general properties of those function types and how their rate of growth compares. Here's a reminder of different function types shown here, in order of their growth:

Constant functions

Logarithmic functions

Linear functions

Linearithmic functions

Polynomial functions

Exponential functions

Which type is each of the presented options?

Constant functions:

64

Logarithmic functions

log8n, log2n

Linear functions

4n

Linearithmic functions

nlog2n, nlog8n

Polynomial functions

8n2, 6n3

Exponential functions

82n

Within the logarithmic functions, the lesser bases grow more quickly than the higher bases - so log2n will grow more quickly than log8n. You can see that in the graph below:

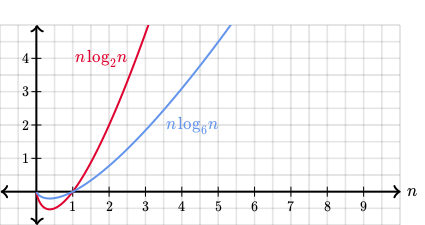

The linearithmic functions are those that multiply linear terms by a logarithm, of the form nlogkn. With the nnn being the same in both, then the growth is dependent on the base of the logarithms. And as we just stated, the lesser bases grow more quickly than the higher bases - so nlog2n will grow more quickly than nlog6n. You can see that in the graph below:

Within the polynomial functions, 8n2 will grow more slowly than 6n3 since it has a lesser exponent. We don't even have to look at the constants in front, since the exponent is more significant.

To conclude, the correct order of the functions would be:

64

log8n

log2n

4n

nlog6n

nlog2n

8n2, 6n3

82n

Big-O notation

If a running time is O(f(n)), then for large enough n, the running time is at most k⋅f(n) for some constant k. Here's how to think of a running time that is O(f(n)):

We use big-O notation for asymptotic upper bounds, since it bounds the growth of the running time from above for large enough input sizes.

Big-Ω (Big-Omega) notation

Sometimes, we want to say that an algorithm takes at least a certain amount of time, without providing an upper bound. We use big-Ω notation; that's the Greek letter "omega."

If a running time is Ω(f(n)), then for large enough n, the running time is at least k⋅f(n) for some constant k. Here's how to think of a running time that is Ω(f(n)):

We say that the running time is "big-Ω of f(n). We use big-Ω notation for asymptotic lower bounds, since it bounds the growth of the running time from below for large enough input sizes.

Just as Θ(f(n)) automatically implies O(f(n)), it also automatically implies Ω(f(n)). So we can say that the worst-case running time of binary search is Ω(log2n).

Last updated